In 2022, we conducted more than 400 DDoS-StressTests, testing all possible vectors, protocols, and methods, from simple script-kiddie attacks to advanced RedTeaming with OSINT, target search, use of BrowserBots and MachineLearning to overcome browser challenges and captchas, as well as programming custom traffic generators.

In this article, we analyze all tests to find out which attacks are still successful and why.

As a summary of our findings:

- TCP DirectPath / TCP Handshakes and Layer-7 attacks are the new stars

- Mitigation of UDP reflection/amplification is mastered by all providers

- Mitigation problems mostly arose from configuration errors, not from inadequate technology

- The higher the sophistication level, the more likely the attack will be successful

These general trends confirm the vendors' reports.

Details

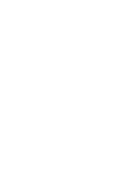

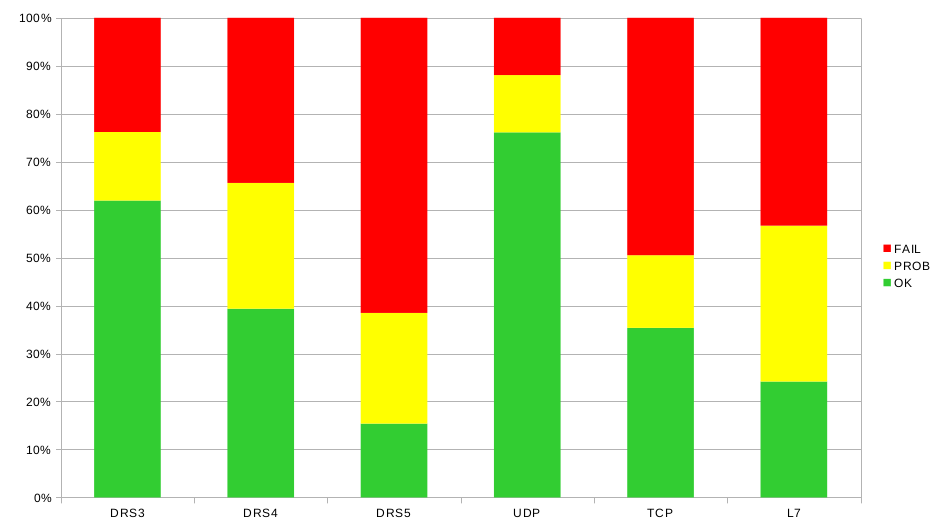

Evaluated by percentage, it is clear that low-skill attacks, mainly UDP reflection/amplification, are mitigated in roughly 90% of our test cases. TCP and Layer 7 attacks, whether against web, IPSec, DNS, SIP, etc., remain a problem; skilled attackers therefore prefer these types of attacks.

The higher the skill level of the attacker, the greater the problems on the defender's side, especially when considering the complexity of today's IT systems. The DDoS attack surface is and remains a too-often neglected topic that groups like KillNet and NoName know how to use to their advantage.

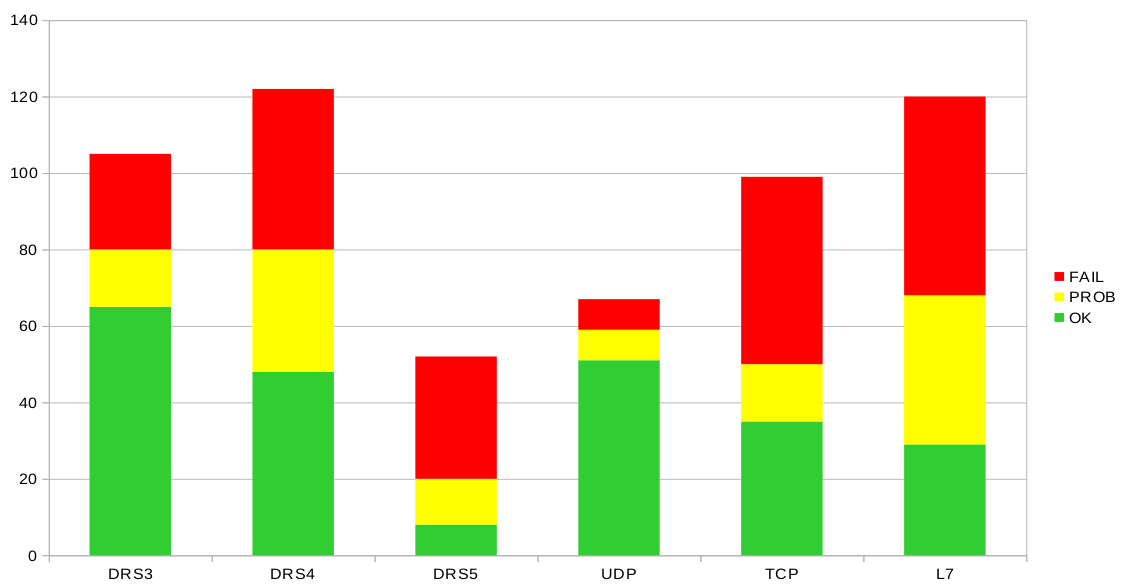

Another aspect that we have always suspected but can now support with real numbers: testing pays off. Misconfigurations are quickly recognized and can be fixed. Potential attack points can be tested before attackers find them.

We accompany teams that have accepted the challenge and managed to make the huge step from "we have just implemented our DDoS mitigation" status to RedTeaming within one year.

Derivation for BlueTeams

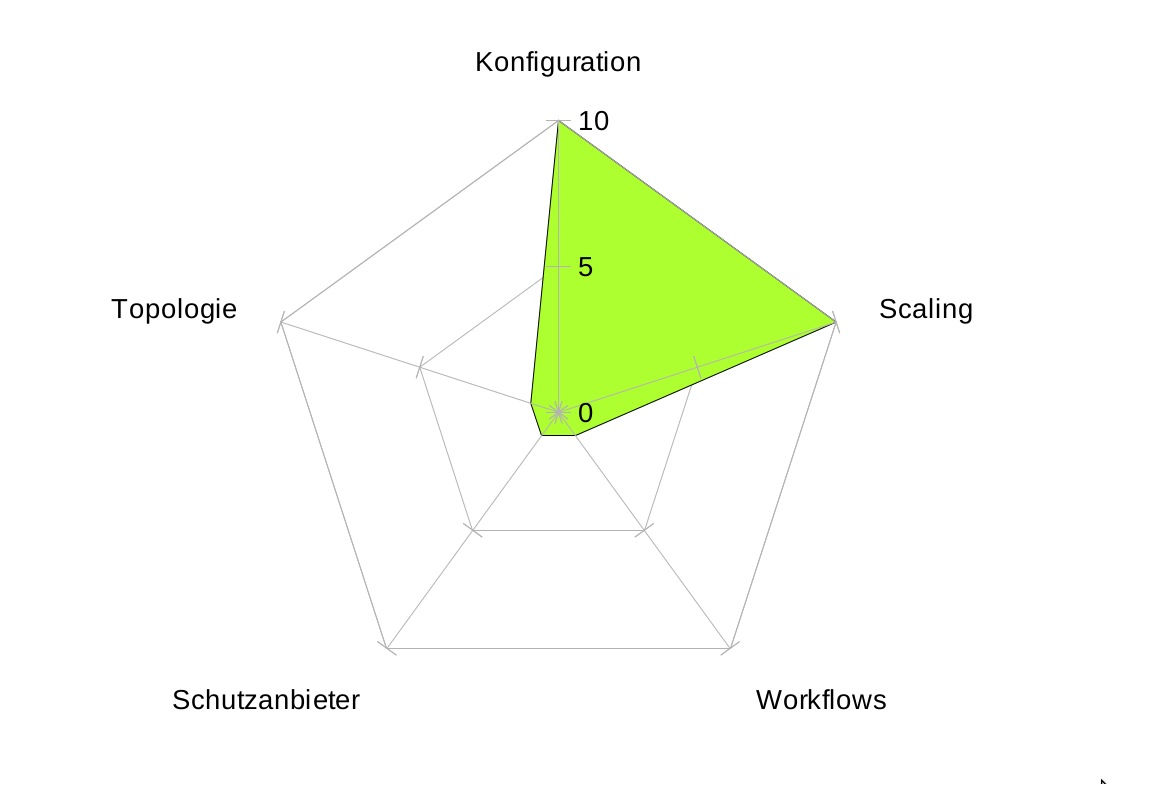

We have identified several problem areas where effective DDoS mitigation can fail. In addition to a clean architecture and implementation, organizational workflows and network topology are also crucial.

We recommend that every team continuously test its own DDoS protection level and adapt it to the constantly changing threat landscape.

Testing pays off in the long run.

Methodology

We conduct coordinated DDoS-StressTests for our customers, mostly as functionality tests, but increasingly also as RedTeamings. We evaluate the attacks carried out on the basis of the DDoS Resiliency Score (DRS) [1], whose levels can be roughly described by the defined attacker capabilities as follows:

- DRS 3 // Stresser/Booter services, DDoS-as-a-Service

- DRS 4 // Activist groups (KillNet, NoName), DDoS extortion gangs

- DRS 5 // Advanced Attacker, DDoS-For-Hire, professionals

- DRS 6 // High-end professionals (rarely seen)

Attack types can be assigned to the individual DRS levels: UDP reflection, for example, is DRS 3 and TCP handshakes are DRS 4, while carpet bombing, browser attacks, and multi-vector attacks with high randomization fall more into DRS 5.

For every test carried out, we recorded the attack vector (TCP/UDP/Layer 7), the DRS level of the attack, and the status. The status can be:

- OK: Attack mitigated, maximum mitigation time 30 seconds

- Prob: Problematic, attack either partially or manually mitigated, high impact for at least 10 minutes

- Fail: no mitigation

Tests with the same vector and DRS level were then combined, and the worst result was counted. If we carried out 3 UDP tests at DRS 3, two of them OK and one Fail, then a Fail went into the evaluation.

If all 3 passed, then an OK went into the evaluation. This also explains the discrepancy between the total number of tests carried out (408) and the sum shown in the graph.
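
The combination rule described above can be sketched in a few lines of Python. This is only an illustration, not our actual tooling: the function name `aggregate_worst`, the tuple layout, and the sample data are assumptions made for this example, and the sketch ignores any further grouping (for instance per customer or engagement) that a real evaluation would likely need.

```python
# Severity order: a higher number means a worse outcome.
SEVERITY = {"OK": 0, "Prob": 1, "Fail": 2}


def aggregate_worst(tests):
    """Group tests by (vector, DRS level) and keep the worst status per group.

    `tests` is an iterable of (vector, drs_level, status) tuples;
    this layout is a hypothetical choice for the example.
    """
    worst = {}
    for vector, drs_level, status in tests:
        key = (vector, drs_level)
        # Keep the status with the highest severity seen so far for this group.
        if key not in worst or SEVERITY[status] > SEVERITY[worst[key]]:
            worst[key] = status
    return worst


# Made-up sample data mirroring the example in the text:
# three UDP tests at DRS 3 (two OK, one Fail) and three passing TCP tests at DRS 4.
tests = [
    ("UDP", 3, "OK"),
    ("UDP", 3, "OK"),
    ("UDP", 3, "Fail"),
    ("TCP", 4, "OK"),
    ("TCP", 4, "OK"),
    ("TCP", 4, "OK"),
]

result = aggregate_worst(tests)
print(result)  # {('UDP', 3): 'Fail', ('TCP', 4): 'OK'}
```

Six individual tests collapse into two evaluated results here, which is the same effect that makes the sum in the graph smaller than the total number of tests.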

IPv6

We executed 10 IPv6 tests and ALL failed. We are not publishing the reasons for this at this time.

Notes

- [1] We base this on DRS v2.0, which is currently under review by the DRS-Advisory-Board and places attacker capabilities in the foreground.

Questions? Contact: info@zero.bs